On the application of decoding/classification/MVPA approaches to ERP data

If you pay any attention to the fMRI literature, you know that there has been a huge increase in the number of studies applying multivariate methods to the pattern of voxels (as opposed to univariate methods that examine the average activity over a set of voxels). For example, if you ask whether the pattern of activity across the voxels within a given region is different for faces versus objects, you’ll find that many areas carry information about whether a stimulus is a face or an object even if the overall activity level is no different for faces versus objects. This class of methods goes by several different names, including multivariate pattern analysis (MVPA), classification, and decoding. I will use the term decoding here, but I am treating these terms as if they are equivalent.

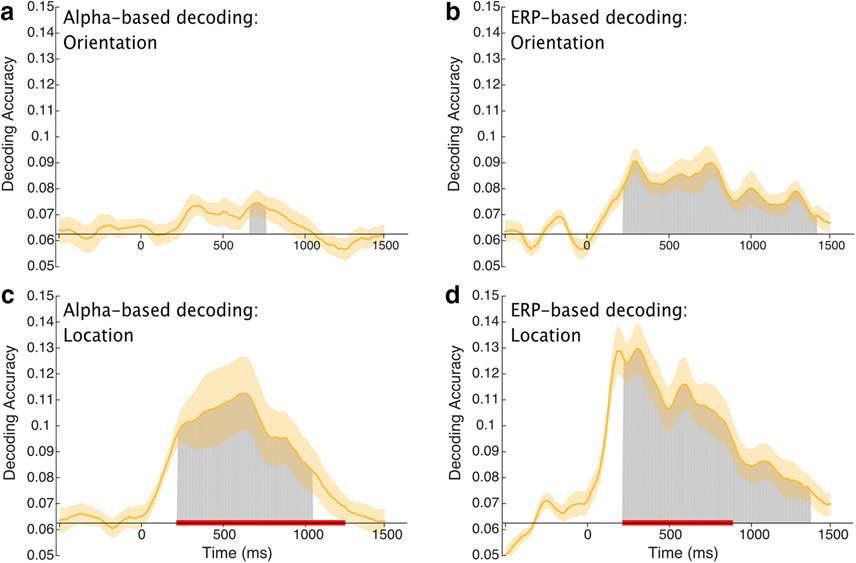

Gi-Yeul Bae and I have recently started applying decoding methods to sustained ERPs and to EEG oscillations (see this paper and this more recent paper), and others have also used them (especially in the brain-computer interface [BCI] field). We have found that decoding can pick up on incredibly subtle signals that would be missed by conventional methods, and I believe that decoding methods have the potential to open up new areas of ERP research, allowing us to answer questions that would ordinarily seem impossible (just as has happened in the fMRI literature). The goal of this blog post is to provide a brief introduction so that you can more easily read papers using these methods and can apply them to your own research.

There are many ways to apply decoding to EEG/ERP data, and I will focus on the approach that we have been using to study perception, attention, and working memory. Our goal in using decoding methods is to determine what information about a stimulus is represented in the brain at a given moment in time, and we apply decoding to averaged ERP waveforms to minimize noise and maximize our ability to detect subtle neural signals. This is very different from the BCI literature, where the goal is to reliably detect signals on single trials that can be used to control devices in real time.

To explain our approach, I will give a simple but hypothetical example. Our actual research examines much more complex situations, so this hypothetical example will be clearer. In this hypothetical study, we present subjects with a sequence of 180 face photographs and 180 car photographs, asking them to simply press a single button for each stimulus. A conventional analysis will yield a larger N170 component for the faces than for the cars, especially over lateral occipitotemporal cortex.

Our decoding approach asks, for each individual subject, whether we can reliably predict whether the stimuli that generated a given ERP waveform were faces or cars. To do this, we will take the 180 face trials and the 180 car trials for a given subject and randomly divide each into 3 sets of 60 trials. This will give us 3 averaged face ERP waveforms and 3 averaged car ERP waveforms. We will then take 2 of the face waveforms and 2 of the car waveforms and feed them into a support vector machine (SVM), which is a powerful machine learning algorithm. The SVM “learns” how the face and car ERPs differ. We do this separately at each time point, feeding the SVM for that time point the voltage from each electrode site at that time point. In other words, the SVM learns how the scalp distribution for the face ERP differs from the scalp distribution for the car ERP at that time point (for a single subject). We then take the scalp distribution at this point in time from the 1 face ERP and the 1 car ERP that were not used to train the SVM, and we ask whether the SVM can correctly guess whether each of these scalp distributions is from a face ERP or a car ERP. We then repeat this process over and over many times using different subsets of trials to create the averaged ERPs used for training and for testing. We can then ask whether, over these many iterations, the SVM can guess whether the test ERP is from faces or cars above chance (50% correct).
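The procedure in the preceding paragraph can be sketched in a few lines of Python. This is only a minimal illustration on simulated data, not the analysis code from the papers. It assumes NumPy and scikit-learn's `SVC` with a linear kernel; the function name `decode_timecourse`, the array layout (trials × electrodes × time points), the number of iterations, and the simulated "face" signal are all assumptions made for the example.

```python
import numpy as np
from sklearn.svm import SVC


def decode_timecourse(face, car, n_sets=3, n_iter=10, seed=0):
    """Decoding accuracy at each time point for one subject.

    face, car: arrays of shape (n_trials, n_electrodes, n_times).
    Hypothetical helper written for illustration only.
    """
    rng = np.random.default_rng(seed)
    n_times = face.shape[2]
    n_correct = np.zeros(n_times)
    n_tests = 0
    for _ in range(n_iter):
        # Randomly divide the trials of each class into n_sets subsets and
        # average within each subset -> (n_sets, n_electrodes, n_times).
        face_avg = np.stack([face[idx].mean(axis=0) for idx in
                             np.array_split(rng.permutation(len(face)), n_sets)])
        car_avg = np.stack([car[idx].mean(axis=0) for idx in
                            np.array_split(rng.permutation(len(car)), n_sets)])
        for test in range(n_sets):
            train = [s for s in range(n_sets) if s != test]
            for t in range(n_times):
                face_t = face_avg[:, :, t]  # scalp distributions at time t
                car_t = car_avg[:, :, t]
                # Train on the averaged ERPs that are not held out.
                X_train = np.concatenate([face_t[train], car_t[train]])
                y_train = np.array([1] * len(train) + [0] * len(train))
                clf = SVC(kernel="linear").fit(X_train, y_train)
                # Test on the held-out face ERP and car ERP.
                X_test = np.stack([face_t[test], car_t[test]])
                n_correct[t] += np.sum(clf.predict(X_test) == np.array([1, 0]))
            n_tests += 2  # one face test and one car test per held-out set
    return n_correct / n_tests


# Simulated subject: 180 trials per class, 16 electrodes, 20 time points.
# Faces differ from cars only in the second half of the epoch (assumed).
sim = np.random.default_rng(1)
face_trials = sim.normal(size=(180, 16, 20))
car_trials = sim.normal(size=(180, 16, 20))
pattern = 0.5 * sim.normal(size=16)  # made-up scalp distribution
face_trials[:, :, 10:] += pattern[None, :, None]

accuracy = decode_timecourse(face_trials, car_trials)
print(np.round(accuracy, 2))
```

In this simulation, accuracy should hover around chance in the first half of the epoch and rise well above it once the simulated face pattern is present. With real data you would typically use many more iterations than the 10 used here.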

This process is applied separately for each time point and separately for each subject, giving us a classification accuracy value for each subject at each time point (see figure below, which shows imaginary data from this hypothetical experiment). We then aggregate across subjects, yielding a waveform showing average classification accuracy at each time point, and we use the mass univariate approach to find clusters of time points at which the accuracy is significantly greater than chance.
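To make the group-level step concrete, here is a minimal sketch of one common mass univariate variant: a one-tailed cluster-mass test with a sign-flip permutation null. It is an assumption that this matches the details of any particular published analysis; the function names, the t threshold of 1.75 (roughly one-tailed p < .05 for 16 subjects), and the simulated accuracy values are all hypothetical.

```python
import numpy as np


def t_values(acc, chance=0.5):
    """One-sample t statistic at each time point; acc is (n_subjects, n_times)."""
    diff = acc - chance
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def find_clusters(t, threshold):
    """Return (start, stop, mass) for each run of consecutive points with t > threshold."""
    clusters, start = [], None
    for i, above in enumerate(np.append(t > threshold, False)):
        if above and start is None:
            start = i
        elif not above and start is not None:
            clusters.append((start, i, float(t[start:i].sum())))
            start = None
    return clusters


def cluster_test(acc, chance=0.5, threshold=1.75, n_perm=1000, seed=0):
    """Cluster-mass test of above-chance accuracy (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    diff = acc - chance
    observed = find_clusters(t_values(acc, chance), threshold)
    null_max = np.zeros(n_perm)
    for p in range(n_perm):
        # Under the null, each subject's deviation from chance is equally
        # likely to be positive or negative, so flip signs per subject.
        signs = rng.choice([-1.0, 1.0], size=(acc.shape[0], 1))
        perm = find_clusters(t_values(chance + signs * diff, chance), threshold)
        null_max[p] = max((m for _, _, m in perm), default=0.0)
    # p value: proportion of permutations whose largest cluster mass
    # is at least as large as the observed cluster mass.
    return [(s, e, m, float(np.mean(null_max >= m))) for s, e, m in observed]


# Simulated group: 16 subjects, 30 time points, above-chance decoding
# only at time points 10-19 (made-up values for illustration).
sim = np.random.default_rng(2)
group_acc = 0.5 + sim.normal(scale=0.05, size=(16, 30))
group_acc[:, 10:20] += 0.15

results = cluster_test(group_acc)
for start, stop, mass, p in results:
    print(f"time points {start}-{stop - 1}: mass = {mass:.1f}, p = {p:.3f}")
```

Clustering adjacent time points in this way controls for the many comparisons across the epoch without requiring a separate correction at every time point.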

In some sense, the decoding approach is the mirror image of the conventional approach. Instead of asking whether the face and car waveforms are significantly different at a given point in time, we are asking whether we can predict whether the waveforms come from faces or cars at a given point in time significantly better than chance. However, there are some very important practical differences between the decoding approach and the conventional approach. First, and most important, the decoding is applied separately to each subject, and we aggregate across subjects only after computing percent correct. As a result, the decoding approach picks up on the differences between faces and cars at whatever electrode sites show a difference in a particular subject. By contrast, the conventional approach can find differences between faces and cars only to the extent that subjects have similar effects. Given that there are often enormous differences among subjects, the single-subject decoding approach can give us much greater power to detect subtle effects. A second difference is that the SVM effectively “figures out” the pattern of scalp differences that best differentiates between faces and cars. That is, it uses the entire scalp distribution in a very intelligent way. This can also give us greater power to detect subtle effects.

In our research so far, we have been able to detect very subtle effects that never would have been statistically significant in conventional analyses. For example, we can determine which of 16 orientations is being held in working memory at a given point in time (see this paper), and we can determine which of 16 directions of motion is currently being perceived (see this paper). We can even decode the orientation that was presented on the previous trial, even though it’s no longer task relevant (see here). We have currently unpublished data showing that we can decode face identity and facial expression, the valence and arousal of emotional scenes from the IAPS database, and letters of the alphabet (even when presented at 10 per second).

You can also use decoding to study between-group differences. Some research uses decoding to try to predict which group an individual belongs to (e.g., a patient group or a control group). This can be useful for diagnosis, but it doesn’t usually provide much insight into how the brain activity differs between groups. Our approach has been to use decoding to ask about the nature of the neural representations within each group. But this can be tricky, because decoding is highly sensitive to the signal-to-noise ratio, which may differ between groups for “uninteresting” reasons (e.g., more movement artifacts in one group). We have addressed these issues in this study that compares decoding accuracy in people with schizophrenia and matched control subjects.